Summary: Siri and IBM Watson for everyone? Organizations might soon be able to rent brain power by the hour from the cloud – creating a new marketplace for contextually aware, adaptive applications powered by software that learns from real-world interactions with human users. Our educational and workflow tools might soon draw on cognitive computing applications to help us learn more effectively and make better decisions on complex issues.

Companies are starting to experiment with business models for delivering cloud-based cognition, in the form of ‘contextual and cognitive computing’, via API engines and standalone software solutions.

Contextual & Cognitive Computing

Cognition as a Service is just a buzzword today. It is far from defined! The path towards bringing cognition-level abilities to organizations will likely pass through stages. The first will be an evolution towards contextual experiences, followed by more sophisticated ‘IBM Watson’-like applications.

Contextual web experiences move us from information delivered through ‘keyword’ connections to a more personalized sense of the right ‘context’ for our lives, based on location, activity, life experiences, preferences and so on. Contextual experiences are more personalized and certainly ‘learn’ from interactions with users – but there is a new paradigm of ‘cognitive’ systems that elevates the experience further.

Cognitive Computing describes an era in which software systems learn on their own and can teach themselves how to improve their performance. IBM Watson is today’s most sophisticated cognitive application. Watson is currently providing decision support for cancer treatments at Memorial Sloan Kettering and financial services support for companies such as ANZ and Citigroup, and is working to evolve the retail customer experience for The North Face.

Cognition as a Service?

In practical terms, this means an organization might be able to buy natural language processing for human-like Question & Answer interactions ‘as a service’ (i.e. not as an application it paid to develop or maintain).
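To make the ‘as a service’ idea concrete, here is a minimal sketch of what calling a rented Question & Answer service might look like from the buyer’s side. Everything in it is an assumption for illustration: the endpoint URL, the `QuestionAnswerClient` class, the request fields and the `fake_transport` stub are hypothetical and do not describe the actual IBM Watson or MindMeld APIs. The stub stands in for the network so the example runs offline.

```python
import json
from dataclasses import dataclass
from typing import Callable, Dict

# Hypothetical endpoint, for illustration only (not a real vendor URL).
ENDPOINT = "https://api.example.com/v1/answers"

# A transport takes (url, body, headers) and returns the raw response bytes.
Transport = Callable[[str, bytes, Dict[str, str]], bytes]


@dataclass
class QuestionAnswerClient:
    """Hypothetical client for a rented Q&A service."""
    api_key: str
    transport: Transport

    def ask(self, question: str, context: Dict[str, str]) -> Dict:
        # The question travels with contextual signals (location, activity, ...).
        body = json.dumps({"question": question, "context": context}).encode("utf-8")
        headers = {
            "Authorization": f"Bearer {self.api_key}",
            "Content-Type": "application/json",
        }
        raw = self.transport(ENDPOINT, body, headers)
        return json.loads(raw.decode("utf-8"))


def fake_transport(url: str, body: bytes, headers: Dict[str, str]) -> bytes:
    """Offline stand-in for the service: echoes the request, answers nothing."""
    request = json.loads(body.decode("utf-8"))
    reply = {
        "answer": f"(stub) you asked: {request['question']}",
        "confidence": 0.0,
        "context_used": sorted(request["context"]),
    }
    return json.dumps(reply).encode("utf-8")


if __name__ == "__main__":
    client = QuestionAnswerClient(api_key="demo-key", transport=fake_transport)
    print(client.ask("Which plan fits this customer?", {"location": "store", "activity": "browsing"}))
```

The point of the sketch is the division of labor: the organization writes a few lines of glue code and pays per call, while the language understanding itself lives with the provider.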

Companies pushing both of these capabilities are simultaneously trying to improve performance, integration and business model design. Early adopters will likely come from industries such as health, finance, energy and education: industries with connected data and a need to augment human knowledge building. These industries tend to have lots of data and compliance-heavy regulatory frameworks.

The cloud-based business models of ‘software-as-a-service’ and ‘platform/infrastructure-as-a-service’ are the most likely first path for contextual and cognitive platforms. Startups such as Expect Labs are bringing API engines like the MindMeld API into the marketplace. IBM Watson is hedging its bets by delivering standalone solutions while also opening up its API to developers.

Developers are just now getting their hands dirty with advanced contextual computing applications. Truly transformational applications will likely emerge between 2015 and 2025.

Companies to Watch:

AlchemyAPI, Declara, Intelligent Artifacts, Grok, Saffron, Stremor PlexiNLP and Vicarious are companies whose products are designed to build knowledge graphs and natural language interactions with contextual and cognitive computing-style capabilities.

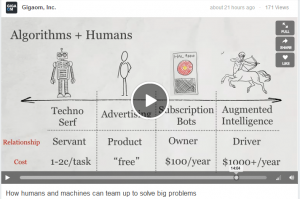

Video to Watch – Range of Business Models?

Sean Gourley, founder of Quid, has been wrapping his head around the future of mankind working ‘with’ machines for several years. I’ve seen Sean’s talk evolve over two years, and it continues to be among the most solid framings of this massive transition towards ‘augmented intelligence’.

This is a great talk by Sean Gourley from the March 2014 GigaOm Data Structure Conference.

***

I’ve posted Expect Labs MindMeld videos here.

***

Looking for something more mainstream and business-oriented:

Garry’s Diigo Tags on: IBM Watson